Intro

Our office was one of the first in LA to receive a Magic Leap One (ML1) headset, and at the time of writing I’d been testing it for about three weeks. Despite disappointing early reviews from various tech media outlets, I found the headset to be generally well designed and highly capable. Since much has already been said about the hardware, I’ll focus on my experience from a software developer’s point of view.

After briefly testing the ML1’s capabilities, we came up with the idea of combining its spatial recognition, eye tracking, and image recognition features into a generalized tool that brick-and-mortar retailers could use to study their customers’ shopping behavior. The goal was to let retailers run studies that identify areas for improvement, such as store layout and merchandising. Before I could start building this proof of concept, though, I needed a more in-depth understanding of the development workflow.

Setup

Although setting up the dev environment on my MacBook wasn’t too difficult, there were a few specific steps to follow. I needed a technical preview version of Unity, the Magic Leap Package Manager app, and the optional Magic Leap Remote. The Remote app was especially useful: it fed input from the ML1 headset directly into the Unity Editor, and it also lets developers who don’t have a headset yet simulate input. Lastly, the additional Hub accessory, which lets the ML1 charge and stay connected to your machine at the same time during development, was super helpful.

Support

I was impressed by the volume of resources already available on Magic Leap’s site the day we received the device. The ML Creator Portal has a ton of well-organized, well-written guides, along with step-by-step tutorials and an API reference to get developers started.

For those of us who learn best from examples (myself included), the SDK includes about a dozen sample scenes, each showcasing a particular feature (e.g., hand gesture recognition). Here’s a brief list of those sample scenes: controller inputs, hand tracking, image recognition, spatial meshing, persistence, mobile companion app, eye tracking, plane detection, and image/video capture.

Early Problems

I did run into a few issues early on. For one, Magic Leap strangely did not have a certification process in place that allowed independent developers to deploy to their own ML1 devices, although in this case they responded quickly on their forum and provided a temporary certificate. I also ran into occasional crashes in both Unity and the ML1 headset, and I couldn’t get native Unity Analytics events to fire properly, so I had to resort to HTTP calls. Lastly, as developers on emerging platforms know all too well, if you run into a problem building for the ML1, it’s much harder to google a solution (assuming one exists).

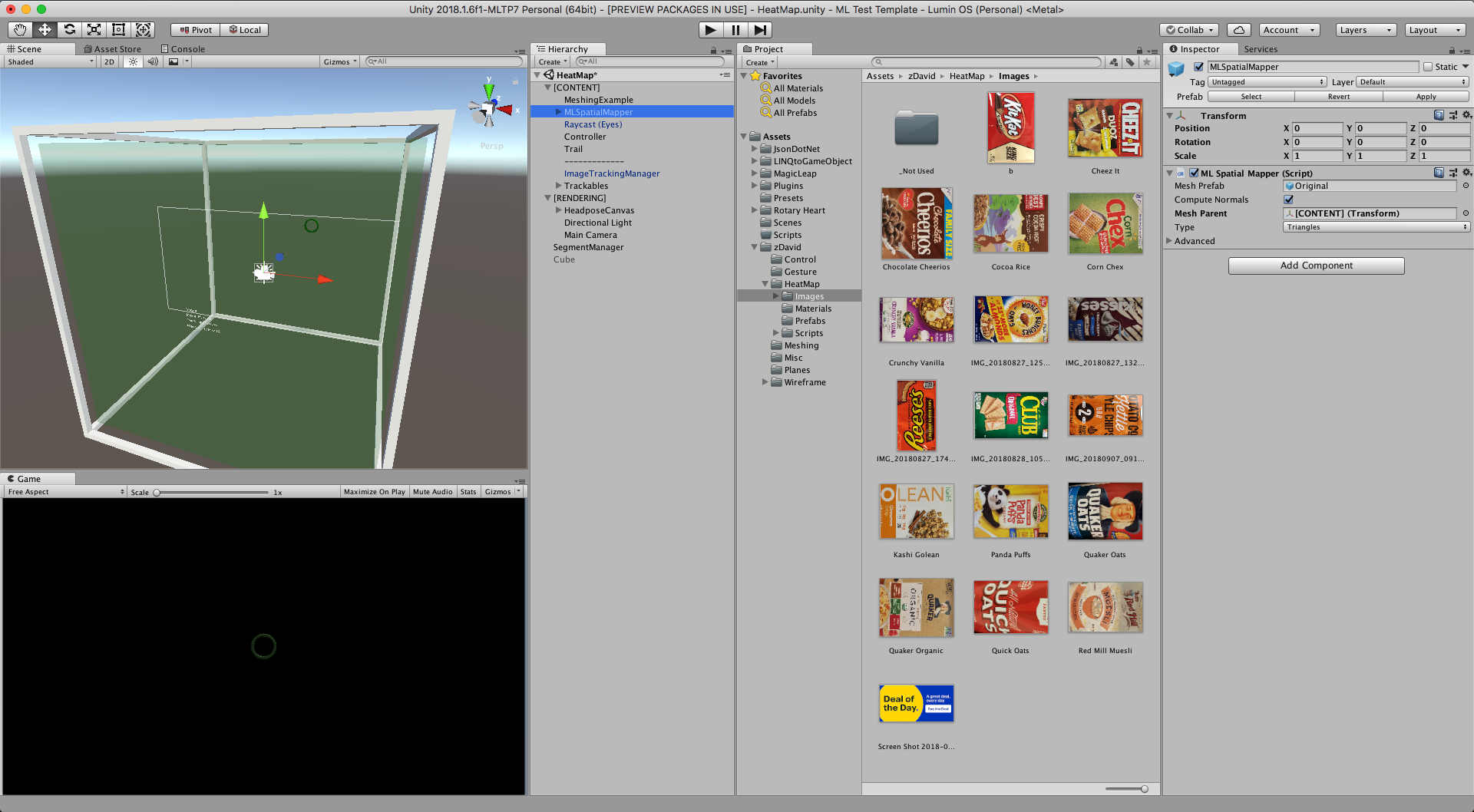

Meshing and Eye Tracking

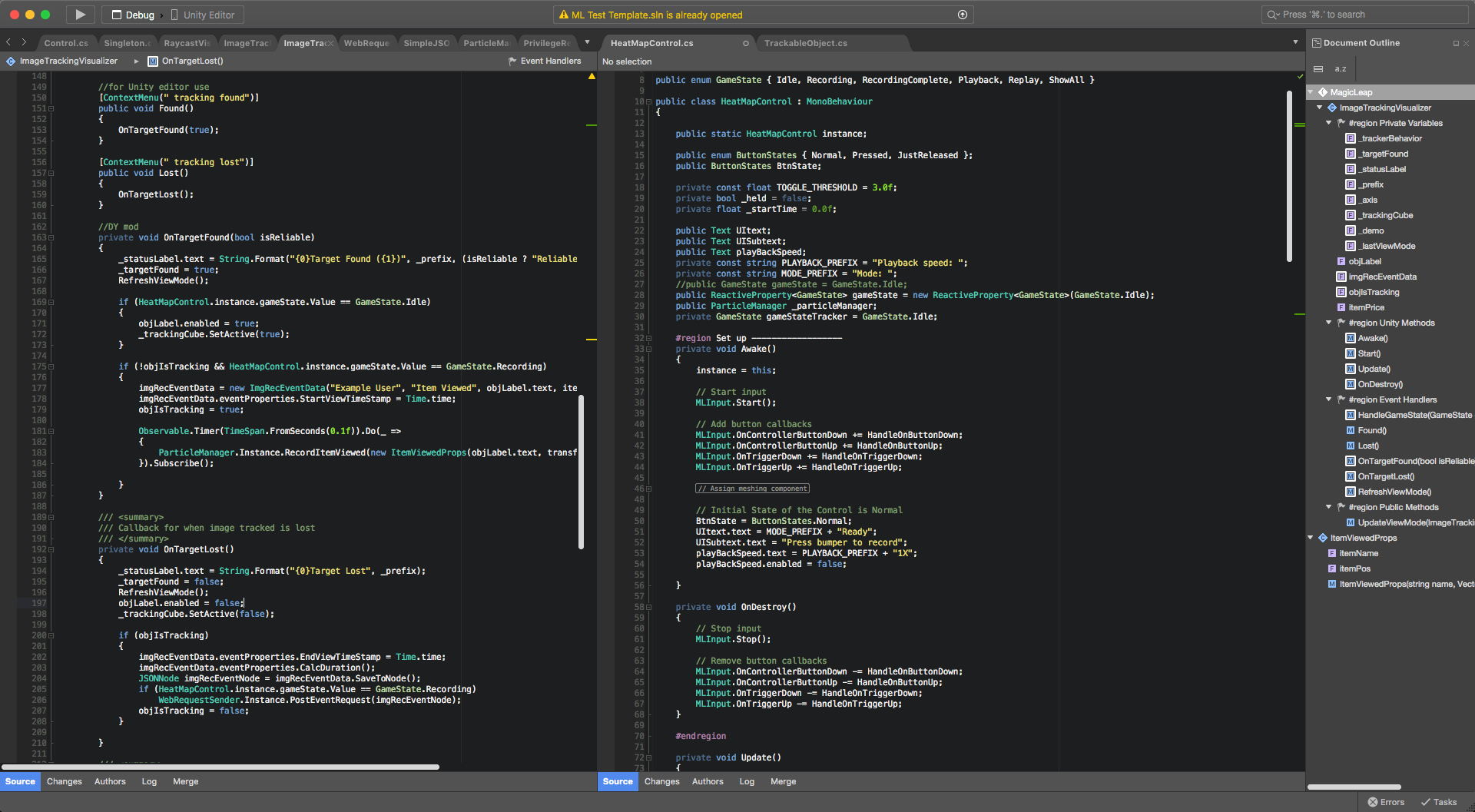

Building on the sample scenes that came with the SDK, I meshed the environment with a wireframe shader and then added eye tracking on top of it. Next, I recorded the 3D coordinates of the users’ eye fixation points and played them back using Unity’s particle system plus a trail renderer, so the viewer can easily follow the eye movement during playback. The particles stack on top of each other, so a spot gets brighter the longer a user stares at it.
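The brightening effect amounts to accumulating dwell time per location. Below is a minimal sketch of that idea outside Unity, assuming fixation samples arrive as timestamped world-space points in meters; the `FixationSample` type, the 5 cm voxel size, and the normalization are illustrative assumptions, not the ML1 SDK's data format or the Unity particle setup described above.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FixationSample:
    t: float  # seconds since the recording started
    x: float  # world-space position in meters (assumed coordinate frame)
    y: float
    z: float


def voxel_key(s, voxel_size):
    """Quantize a fixation point into an integer voxel index."""
    return (math.floor(s.x / voxel_size),
            math.floor(s.y / voxel_size),
            math.floor(s.z / voxel_size))


def accumulate_dwell(samples, voxel_size=0.05):
    """Sum dwell time per voxel.

    Each sample is credited with the time until the next sample, so the
    final sample contributes nothing. `samples` must be a list sorted by time.
    """
    dwell = defaultdict(float)
    for cur, nxt in zip(samples, samples[1:]):
        dwell[voxel_key(cur, voxel_size)] += nxt.t - cur.t
    return dict(dwell)


def brightness(dwell):
    """Normalize dwell times to [0, 1] so the longest-viewed voxel is brightest."""
    peak = max(dwell.values(), default=0.0)
    if peak <= 0:
        return {k: 0.0 for k in dwell}
    return {k: v / peak for k, v in dwell.items()}
```

Binning into voxels rather than keeping raw points means repeated glances at the same shelf spot add up, which is the same intuition as the stacked particles.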

I was pleasantly surprised to find that eye tracking was fast and relatively accurate. Although there were areas in the FOV where the tracking reticle was offset from my fixation point, for the most part it updated quickly and accurately. That wasn’t the case for everyone on our team, though: some found the tracking significantly offset from their fixation point, which I suspect was due to the headset not fitting them properly.
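One way to put a number on that offset is the angle between the tracked gaze direction and the true direction from the eye to a target the tester is told to look at. This is a sketch under assumptions: the eye origin, gaze direction, and target position are all expressed in the same world frame, and the helper names are hypothetical rather than part of the Magic Leap SDK.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _norm(v):
    return math.sqrt(sum(c * c for c in v))


def angular_error_deg(eye_origin, gaze_dir, target):
    """Angle in degrees between the tracked gaze and the true direction to a target."""
    to_target = _sub(target, eye_origin)
    denom = _norm(gaze_dir) * _norm(to_target)
    if denom == 0:
        raise ValueError("gaze direction and eye-to-target vector must be non-zero")
    cos_theta = sum(g * t for g, t in zip(gaze_dir, to_target)) / denom
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def mean_angular_error_deg(samples):
    """Average error over (eye_origin, gaze_dir, target) tuples."""
    errors = [angular_error_deg(*s) for s in samples]
    if not errors:
        raise ValueError("need at least one sample")
    return sum(errors) / len(errors)
```

Comparing each person's average error before and after adjusting the headset would be one way to test the fit hypothesis, assuming you can place targets at known positions.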

Image Recognition

We wanted to incorporate the ML1’s image recognition so that we would know not only where shoppers were looking, but also what they were looking at. The functionality is still limited, since it only recognizes large, flat images, but I was able to get the ML1 to recognize some common packaged goods, including crackers, chocolate, and even bags of chips. It worked best with larger, rigid products whose packaging has high-contrast designs.
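Combining "where" with "what" can be framed as a ray cast: intersect the gaze ray with the plane of each recognized image and keep the nearest hit that lands inside the image's rectangle. The sketch below assumes each recognized target is described by a center, a unit normal, two unit in-plane axes, and half extents; `ImageTarget` is a hypothetical stand-in, not the SDK's image-tracking type.

```python
from dataclasses import dataclass

Vec = tuple[float, float, float]


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def along(origin, direction, t):
    return (origin[0] + direction[0] * t,
            origin[1] + direction[1] * t,
            origin[2] + direction[2] * t)


@dataclass
class ImageTarget:
    name: str
    center: Vec
    normal: Vec       # unit normal of the flat image
    right: Vec        # unit in-plane axis along the image width
    up: Vec           # unit in-plane axis along the image height
    half_width: float
    half_height: float


def gazed_target(origin, direction, targets):
    """Return (name, t) for the nearest image hit by the gaze ray, or None.

    t is measured in multiples of |direction|, so it is in meters only when
    direction is a unit vector.
    """
    best = None
    for tgt in targets:
        denom = dot(direction, tgt.normal)
        if abs(denom) < 1e-9:
            continue  # gaze is parallel to the image plane
        t = dot(sub(tgt.center, origin), tgt.normal) / denom
        if t <= 0:
            continue  # image plane is behind the viewer
        local = sub(along(origin, direction, t), tgt.center)
        inside = (abs(dot(local, tgt.right)) <= tgt.half_width
                  and abs(dot(local, tgt.up)) <= tgt.half_height)
        if inside and (best is None or t < best[1]):
            best = (tgt.name, t)
    return best
```

Picking the nearest hit handles the case where one package partially occludes another along the same line of sight.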

Analytics

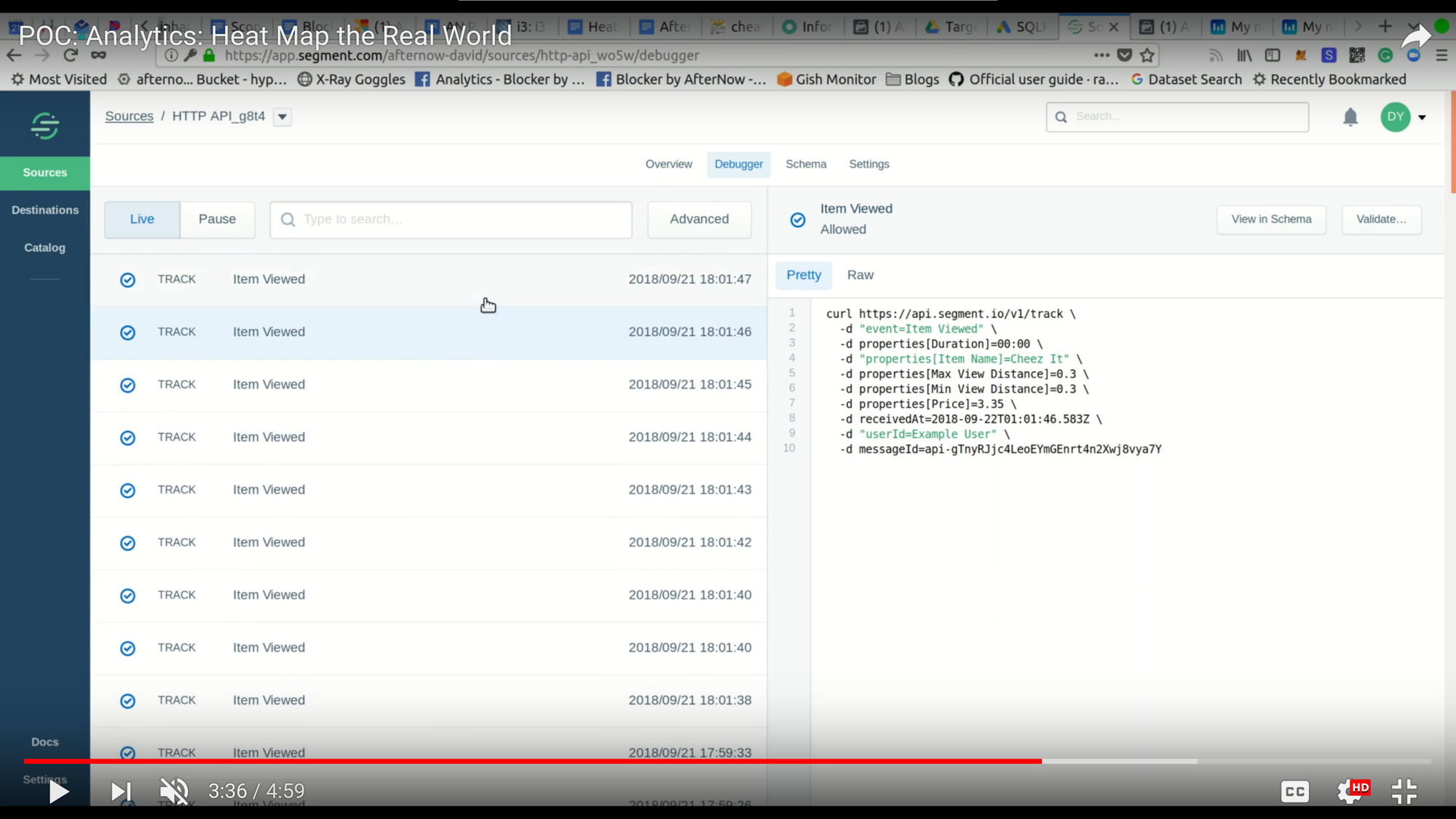

I wanted an off-the-shelf solution to record simple events during users’ shopping trips, such as items viewed, view duration, price, and distance to the item. However, I could not get Unity Analytics to fire and register events. I knew Lumin OS wasn’t yet an officially supported Unity Analytics platform, and I wasn’t sure whether other popular packages such as Firebase would support it.
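Events like "item viewed" with a view duration can be derived by collapsing consecutive gaze frames on the same item into a single view. This sketch assumes per-frame samples carrying a timestamp, the recognized item (or `None`), and a distance in meters; the `GazeFrame` type, the price table, the property names, and the 0.2 s minimum used to ignore passing glances are all hypothetical choices.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GazeFrame:
    t: float               # seconds since the session started
    item: Optional[str]    # recognized item under the gaze, or None
    distance: float        # meters from headset to the gaze hit (assumed available)


def view_events(frames, prices, min_duration=0.2):
    """Collapse runs of frames on the same item into 'Item Viewed' event dicts."""
    events = []
    i = 0
    while i < len(frames):
        item = frames[i].item
        j = i
        while j < len(frames) and frames[j].item == item:
            j += 1
        # A run ends when the next frame starts; the final run ends at its last frame.
        end_t = frames[j].t if j < len(frames) else frames[j - 1].t
        duration = end_t - frames[i].t
        if item is not None and duration >= min_duration:
            dists = [f.distance for f in frames[i:j]]
            events.append({
                "event": "Item Viewed",
                "properties": {
                    "item": item,
                    "view_duration_s": round(duration, 3),
                    "price": prices.get(item),
                    "avg_distance_m": round(sum(dists) / len(dists), 3),
                },
            })
        i = j
    return events
```

Aggregating on the headset keeps the number of analytics calls proportional to views rather than to frames.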

We eventually integrated the HTTP API from Segment, an analytics middleware provider, and routed the data to an analytics platform called Mixpanel. I ran into some issues along the way, but eventually got it working.
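For reference, Segment's HTTP Tracking API accepts a JSON `POST` to `/v1/track`, authenticated with HTTP Basic auth using the source's write key as the username and an empty password. The sketch below only builds the request so it can be inspected; the write key, the `anonymousId` value, and the event properties are placeholders, and this is not necessarily how our integration was structured.

```python
import base64
import json
import urllib.request
from datetime import datetime, timezone

SEGMENT_TRACK_URL = "https://api.segment.io/v1/track"


def build_track_request(write_key, anonymous_id, event, properties, timestamp=None):
    """Build (but do not send) a Segment HTTP Tracking API 'track' request."""
    payload = {
        "anonymousId": anonymous_id,  # Segment requires userId or anonymousId
        "event": event,
        "properties": properties,
        "timestamp": (timestamp or datetime.now(timezone.utc)).isoformat(),
    }
    # Basic auth: the write key is the username and the password is empty.
    token = base64.b64encode(f"{write_key}:".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        SEGMENT_TRACK_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
        method="POST",
    )
```

Sending it is then `urllib.request.urlopen(req, timeout=5)`. In a typical Segment setup, forwarding to Mixpanel is enabled as a destination in the Segment workspace rather than in client code.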

It makes for quite a powerful demo when someone looks at a KitKat bar and the “item viewed” event immediately shows up in real time on the Mixpanel dashboard.

Final Thoughts

It only took about a week to build this proof of concept on the ML1. Although it’s still rough around the edges, the people we’ve shown the demo to liked it and see potential uses for the concept. Retailers have spent millions studying customer behavior in physical stores, which has involved having a third party video-record customers as they roam the aisles and then manually review those recordings to figure out what shoppers are looking at. It’s quite a complicated process. Newer methodologies leverage mobile phones, tracking those devices through user-downloaded apps. With eye tracking and spatial mapping, however, this process could become much more streamlined and, perhaps more importantly, more precise, producing a true eye-tracked heatmap of what shoppers pay the most attention to.