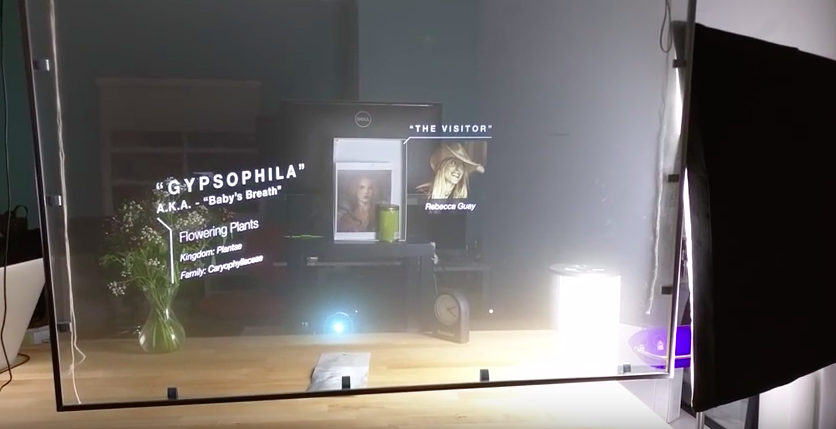

When you walk into our office at HTML Fusion, the first thing you see is a semi-transparent screen about the size of a New Kids On The Block poster. Too impatient to wait for commercial hardware, our team built its own homegrown augmented reality contraption. Using a stereo projector, a semi-transparent screen, and head tracking, it makes computer-generated objects appear to exist in the real world. We call it the Holo UI, and it gives us a test bed for developing the user interfaces of the future.

A Messy Room

If you want to know who painted a picture, you have to search for that information through a separate portal, such as your laptop or a mobile device. What if, instead, the information were available right where you are, without your having to go elsewhere to retrieve it? Within an augmented world we can organize information physically, making it immediately accessible. When information becomes a semi-permanent part of our space, we can retrieve it, quite literally, with just a glance.

Having so much information physically present in one space also creates new organizational challenges. Our first Holo UI experiment at HTML Fusion showed us how quickly the environment can become cluttered. When every object reports some interesting bit of information, the experience quickly becomes overwhelming and the room gets messy. Our question, then, is: how can a user keep her environment uncluttered while still making information retrievable at a glance? Our current design focuses on letting the user curate information.

The Reticle

In earlier prototypes we found that users did not understand how they were influencing the scene. Even telling them, “If you look at an object, you can get more information about it,” was confusing. They needed to understand that their head is the controller. Gun sights and telescopes often carry a marking, such as a bullseye or crosshair, that lets you aim at your target. This marking is called a reticle (check out what Oculus and Google have written on the subject), and the idea transfers directly to our Holo UI. Our first iteration displayed a small ball, projected two meters in front of the user, that followed her head movements. This ball, however, added clutter to the environment.

In our current demo, the reticle has shrunk to a nearly invisible little white dot. To keep augmented items discoverable despite the smaller reticle, we added a hover state that lets a user find out which items are augmented without being exposed to extra noise. When the user focuses on an object that contains information, the reticle gains a white outline to signal that information is present. We notice the reticle in this state only passively, so the hover cue stays unobtrusive.

To make the reticle less distracting, we made its depth adapt to the object in focus. We found that when the reticle sits at a fixed distance, the eye is drawn away from the object of interest and converges on the reticle instead. With the reticle placed at the same depth as the object, items in the scene no longer fight for attention. The difference is subtle, but it has a considerable effect on usability.
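The post does not say how the Holo UI computes this depth, but the hover state and the adaptive depth together suggest a gaze raycast. The following is a minimal sketch under stated assumptions. The head position and gaze direction are assumed to come from head tracking. Each object is approximated by a hypothetical bounding sphere. The reticle is placed where the gaze ray first hits an object and falls back to the fixed two meters of the first prototype when nothing is hit. The names `SceneObject`, `Reticle`, and `update_reticle` are illustrative only.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Fallback depth when the gaze hits nothing (the first prototype's fixed 2 m).
DEFAULT_DISTANCE = 2.0


@dataclass
class SceneObject:
    name: str
    center: Vec3
    radius: float   # assumption: each object is approximated by a bounding sphere
    has_info: bool  # whether the object carries augmented information


@dataclass
class Reticle:
    position: Vec3
    outlined: bool                 # hover state: outline shown over an informative object
    target: Optional[SceneObject]  # object under the reticle, if any


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _ray_sphere(origin: Vec3, direction: Vec3, obj: SceneObject) -> Optional[float]:
    """Distance along a unit-length ray to the sphere's nearest surface point, or None."""
    to_center = _sub(obj.center, origin)
    t_closest = _dot(to_center, direction)
    dist_sq = _dot(to_center, to_center) - t_closest * t_closest
    r_sq = obj.radius * obj.radius
    if dist_sq > r_sq:
        return None  # the ray passes beside the sphere
    half_chord = math.sqrt(r_sq - dist_sq)
    t_near, t_far = t_closest - half_chord, t_closest + half_chord
    if t_far < 0:
        return None  # the sphere is entirely behind the viewer
    return t_near if t_near >= 0 else t_far


def update_reticle(head: Vec3, gaze: Vec3, objects: Sequence[SceneObject]) -> Reticle:
    """Cast the head-gaze ray and put the reticle at the depth of the nearest hit."""
    norm = math.sqrt(_dot(gaze, gaze))
    d = (gaze[0] / norm, gaze[1] / norm, gaze[2] / norm)
    best_t, best_obj = None, None
    for obj in objects:
        t = _ray_sphere(head, d, obj)
        if t is not None and (best_t is None or t < best_t):
            best_t, best_obj = t, obj
    depth = best_t if best_t is not None else DEFAULT_DISTANCE
    pos = (head[0] + d[0] * depth, head[1] + d[1] * depth, head[2] + d[2] * depth)
    outlined = best_obj is not None and best_obj.has_info
    return Reticle(pos, outlined, best_obj)
```

Running this every frame keeps the dot at the focused object's depth. The `outlined` flag drives the hover cue, which appears only when the object under the reticle actually carries information.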

When the user notices that an object can be acted on, she can click the mouse to get more information. Clicking acts as a toggle: one click displays information about the object, and a second click hides it. The user can still create a cluttered scene, but she now also has the ability to curate it. Once the scene is curated, the information she chose to display persists and can be returned to later.
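The toggle itself needs very little state. In this sketch, the hypothetical `InfoLayer` class records which objects currently show their information. Clicks are routed to whatever object is under the reticle, and a click on empty space or on an object without information is ignored. The record is assumed to live independently of the gaze, so panels stay open after the user looks away. That is one reading of "persists"; the post does not specify the mechanism.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class InfoLayer:
    """Hypothetical record of which objects currently display their information."""

    shown: Set[str] = field(default_factory=set)

    def on_click(self, focused: Optional[str], has_info: bool) -> Optional[bool]:
        """Toggle the panel of the object under the reticle.

        Returns the panel's new visibility, or None if the click had nothing to act on.
        """
        if focused is None or not has_info:
            return None  # nothing augmented under the reticle: ignore the click
        if focused in self.shown:
            self.shown.discard(focused)  # second click hides the information
            return False
        self.shown.add(focused)  # first click displays it
        return True
```

Because the `shown` set is separate from the reticle, the scene the user curates stays the same as her gaze moves around the room.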

Through the Looking Glass

A third version of the reticle that we are experimenting with is a lens: an expandable circle through which the user views the scene, much like holding up a looking glass. Our idea is that lenses are analogous to the apps on our phones, with each lens representing one app.

Through a given lens, the user can see a preview of an augmented world. The lens can also be detached from the user’s head and left fixed in the environment. We imagine that in this form a user could view several lenses at once, choosing to look through a lens, peek inside, or ignore them entirely. A lens can also be expanded to occupy the user’s entire field of vision, the equivalent of bringing an app to the foreground. Removing all lenses leaves the user with a quiet, uninterrupted view of reality.
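The lens is still a concept, but the behaviors described above map onto a small state model. Each lens is attached to the head, detached and pinned in the room, or expanded to fill the view. This hypothetical sketch uses the names `LensMode`, `Lens`, and `LensManager`. It also makes one assumption the post does not state: only one lens can be expanded at a time, as only one app can be in the foreground.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


class LensMode(Enum):
    ATTACHED = "attached"  # follows the user's head
    DETACHED = "detached"  # pinned at a fixed point in the room
    EXPANDED = "expanded"  # fills the whole field of view, like a foreground app


@dataclass
class Lens:
    app: str
    mode: LensMode = LensMode.ATTACHED
    anchor: Optional[Vec3] = None  # world position, set once the lens is detached


@dataclass
class LensManager:
    lenses: Dict[str, Lens] = field(default_factory=dict)

    def open(self, app: str) -> Lens:
        lens = Lens(app)
        self.lenses[app] = lens
        return lens

    def detach(self, app: str, anchor: Vec3) -> None:
        lens = self.lenses[app]
        lens.mode = LensMode.DETACHED
        lens.anchor = anchor

    def expand(self, app: str) -> None:
        # Assumption: only one lens fills the view at a time, so any other expanded
        # lens goes back to its pinned spot if it has one, otherwise to the head.
        for other in self.lenses.values():
            if other.app != app and other.mode is LensMode.EXPANDED:
                other.mode = (
                    LensMode.DETACHED if other.anchor is not None else LensMode.ATTACHED
                )
        self.lenses[app].mode = LensMode.EXPANDED

    def close(self, app: str) -> None:
        self.lenses.pop(app, None)

    def is_quiet(self) -> bool:
        # No lenses left: a quiet, uninterrupted view of reality.
        return not self.lenses
```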

What's Next

We plan to keep investigating designs that empower users to augment their world in ways they find useful without overwhelming them with digital clutter. We’re looking forward to getting our hands on new hardware that frees us from some of the restrictions of our current Holo UI prototype. With the ability to walk around a room, we can really explore how physical space can be used to organize and retrieve information.

What are some ideas you have for keeping your augmented world neat and tidy? Tweet us your ideas @AfterNowLab.