Because mixed reality layers holograms over whatever is in your field of view, you never lose track of your place in the world. And if you're not sure where that place is, our dev Anthony has just created a localized, GPS-style HoloLens app that can help.

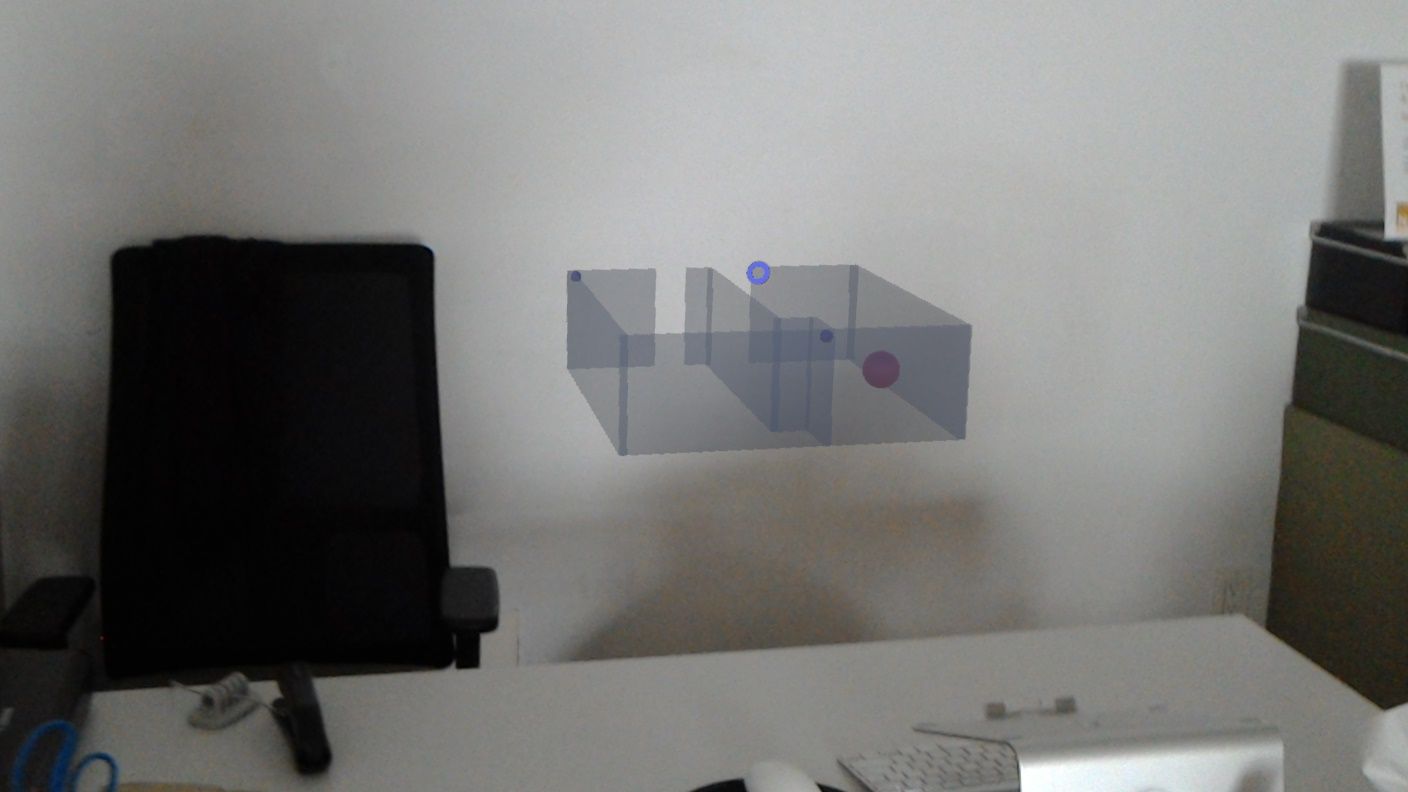

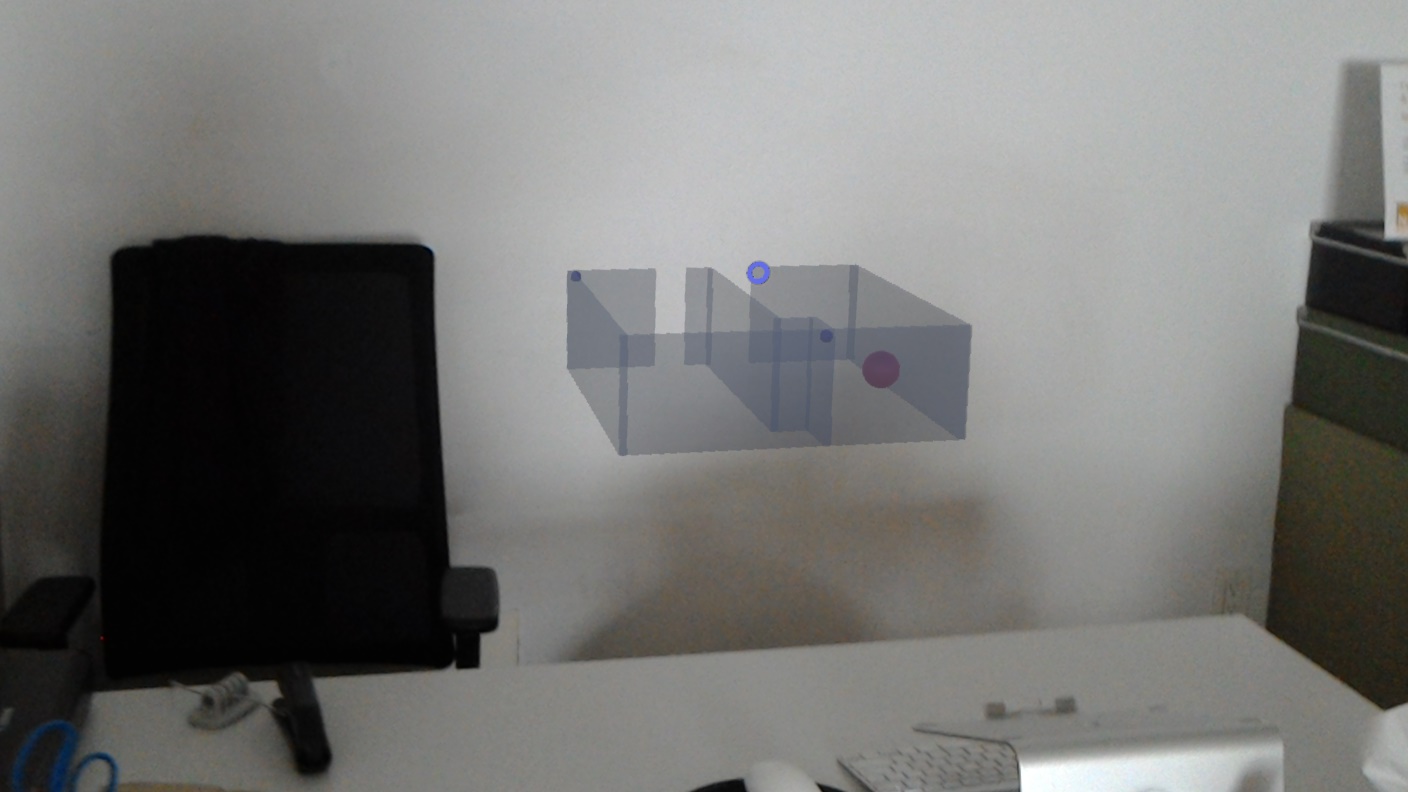

HoloMaps is a mini map that follows your head, floating about two feet in front of you, just below eye level. A small pink sphere marks your position in a hollow 3D mockup of whatever environment you've mapped -- in our case, the HTML Fusion offices. The tracker measures your position relative to an anchor and uses that data to update your marker on the mini map in real time.

HoloMaps can guide you back to your desk.

The demo sprang from one of our August lab days and has roots in Anthony's love of gaming (see his excellent post on manipulating the Internet of Things by using Minecraft to switch the lights). So far the demo is simple, in a way that nods to anyone who’s ever played The Legend of Zelda, and we see a myriad of future use cases, from surveying and search-and-rescue missions to finding your seat at a theatre or stadium, getting through the mall, and plotting a flawless path back to wherever you left your car in the parking lot.

Here's Anthony's GitHub link if you're ready to try it.

And here's how it works:

Anthony develops HoloMaps with Unity and C#. He starts by scanning the room with our HoloLens and then imports the room mesh into Unity. Using basic geometric shapes, he creates a maze-like replica of our offices made up of simple walls, then miniaturizes it to 1:32 scale. The ratio isn't fixed, though: once the model is built, it can be rescaled by changing a single variable in the code. This lets the user find the most comfortable size for the HoloLens' viewing angle without adjusting the 3D model by hand.
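To make the "one variable" idea concrete, here is a minimal sketch of scaling a wall layout by a single factor. It is written in Python purely for illustration (the app itself is Unity and C#), and the names `MAP_SCALE`, `scale_point`, and `scale_walls` are hypothetical, not taken from Anthony's code.

```python
# Hypothetical sketch: the whole mini map is driven by one scale factor.
MAP_SCALE = 1 / 32  # assumed name; 1:32 is the ratio mentioned in the post


def scale_point(point, scale=MAP_SCALE):
    """Shrink a real-world (x, y, z) point, in meters, to mini-map size."""
    return tuple(coord * scale for coord in point)


def scale_walls(walls, scale=MAP_SCALE):
    """Scale every wall, where a wall is a (start, end) pair of points."""
    return [(scale_point(start, scale), scale_point(end, scale))
            for start, end in walls]


# A 32 m wall along the x axis becomes a 1 m wall on the mini map.
office_walls = [((0.0, 0.0, 0.0), (32.0, 0.0, 0.0))]
mini_walls = scale_walls(office_walls)
```

Changing `MAP_SCALE` (or passing a different `scale`) resizes every wall at once, which is the property that makes it easy to try different map sizes without touching the model.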

The "You are here" marker relies on an anchor-based coordinate system. Anthony places spheres called "WorldAnchors," which are the Unity/HoloLens version of a thumbtack, at prominent locations in each real room represented on the map. He then places corresponding, scaled mini-anchors at the same locations on the mini map. Finally, the pink sphere marker is placed in the mini map. Once the app boots up, the program continuously measures the user's distance and direction from the anchor, multiplies that offset by the same scaling factor mentioned above, and moves the marker to the matching spot relative to the mini-anchor.
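The marker update described above boils down to a small piece of vector arithmetic. The sketch below is an illustration in Python rather than the app's actual C# code; `marker_position` and its parameters are hypothetical names, and it assumes the mini map's axes line up with the room's (no rotation between the two).

```python
def marker_position(user_pos, world_anchor, mini_anchor, scale=1 / 32):
    """Return where the pink marker should sit, in mini-map coordinates.

    user_pos     -- the user's (x, y, z) position in the real room
    world_anchor -- position of the real-world anchor in that room
    mini_anchor  -- position of the matching mini-anchor on the map
    scale        -- the same factor used to shrink the room model
    """
    # Offset from the anchor to the user, measured in the real room.
    offset = tuple(u - a for u, a in zip(user_pos, world_anchor))
    # Shrink the offset and apply it from the mini-anchor.
    return tuple(m + o * scale for m, o in zip(mini_anchor, offset))


# The user stands 8 m from the anchor along both x and z.
marker = marker_position(
    user_pos=(10.0, 1.5, 6.0),
    world_anchor=(2.0, 1.5, -2.0),
    mini_anchor=(0.5, -0.25, 0.5),
)
# marker == (0.75, -0.25, 0.75): a 0.25 m shift on the 1:32 map
```

Running this once per frame with the headset's current position would keep the marker in step with the user; measuring against the anchor in the current room, rather than a single global origin, is presumably what keeps the marker accurate from room to room.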

So far, the map feels fluid as I move and has accurately marked my position in each room. With more testing, we may run into challenges as we expand the map's perimeter. Some other questions that come up: Where would the map be most helpful? Should it remain constantly in front of the user? Should the view change when the user is looking in a certain direction as opposed to walking?

And, finally: Should we note the location of the Triforce piece in each setting?