VRLA Mixed Reality Easter Egg Hunt

An unlikely project that became the highlight of VRLA 2017

Intro

Objective

Our objective was to create a mass-scale mixed reality Easter egg hunt using the HoloLens.

Inception

VRLA co-founder John Root originally pitched the idea to Microsoft. Microsoft then reached out to AfterNow, one of its HoloLens agency partners, for help with the project.

The original concept looked like this:

Team

Experience design & development:

John Root

Philippe Lewicki

Rafai Eddy

Mike Murdock

Nathan Fulton

Ralph Barbagallo

Bryan Truong

Fon

Adam Tuliper

Set design:

FonCo

To run the experience:

Philippe Lewicki

Rafai Eddy

Jesse Vander Does

Bryan Truong

Kat

Josh

Vanessa

Kim

Diana

Rachel

Execution

The project was green-lit less than 4 weeks before the expo. With no time to spare, all the phases described below ran in parallel, which created a fair amount of synchronization overhead.

User Experience Process

Envisioning

Envisioning is the first thing we do in all our projects. The process is structured to get everyone on the same page by collaborating to define parameters, generating a ton of ideas, and ultimately selecting the best ones.

Our Envisioning process is well structured, and its duration is flexible, anywhere from 1 to 5 days.

Given the time constraints of this project, we opted for a quick Envisioning session.

Key Outcomes From the Envisioning

Goals we set:

- The experience exceeds the customer's expectations

- The experience creates a lot of buzz, i.e., the press talks positively about it

- Get 500 people through the experience

Our key constraints:

- No gestures

- No wifi

- Make users forget about the field of view

The MVP would start with:

- Ambient butterflies and birds

- An animated rabbit

- A number of eggs to be found in a limited time

Demo-booth User Flow

We needed to outline the booth's capacity and the movement and timing of people through it early on, so we could get construction of the set underway. Users needed to be comfortable while waiting, and then we needed to figure out how to fit them with the device.

Key steps in the user flow included:

- Standing in a long line outside the booth

- Measuring IPD (inter-pupillary distance)

- Getting the HoloLens securely fitted on

- Receiving a brief introduction to the experience

- Walking through a 3-minute experience (finding and opening 5 eggs)

- Taking a group picture

- Sharing email and providing experience feedback

Key criteria we needed to accommodate:

- We wanted people to line up at the rear of the booth so that passersby would have an unobstructed view of it.

- We needed to make sure all HoloLens devices stayed at the booth at all times.

- It was important to have space for visitors, press and staff to mingle and fill out surveys.

- We needed to do as much as we could to keep the HoloLenses from losing tracking. This ultimately meant taking down some walls between the prep area and the experience so the HoloLenses wouldn't need to transition into a different space.

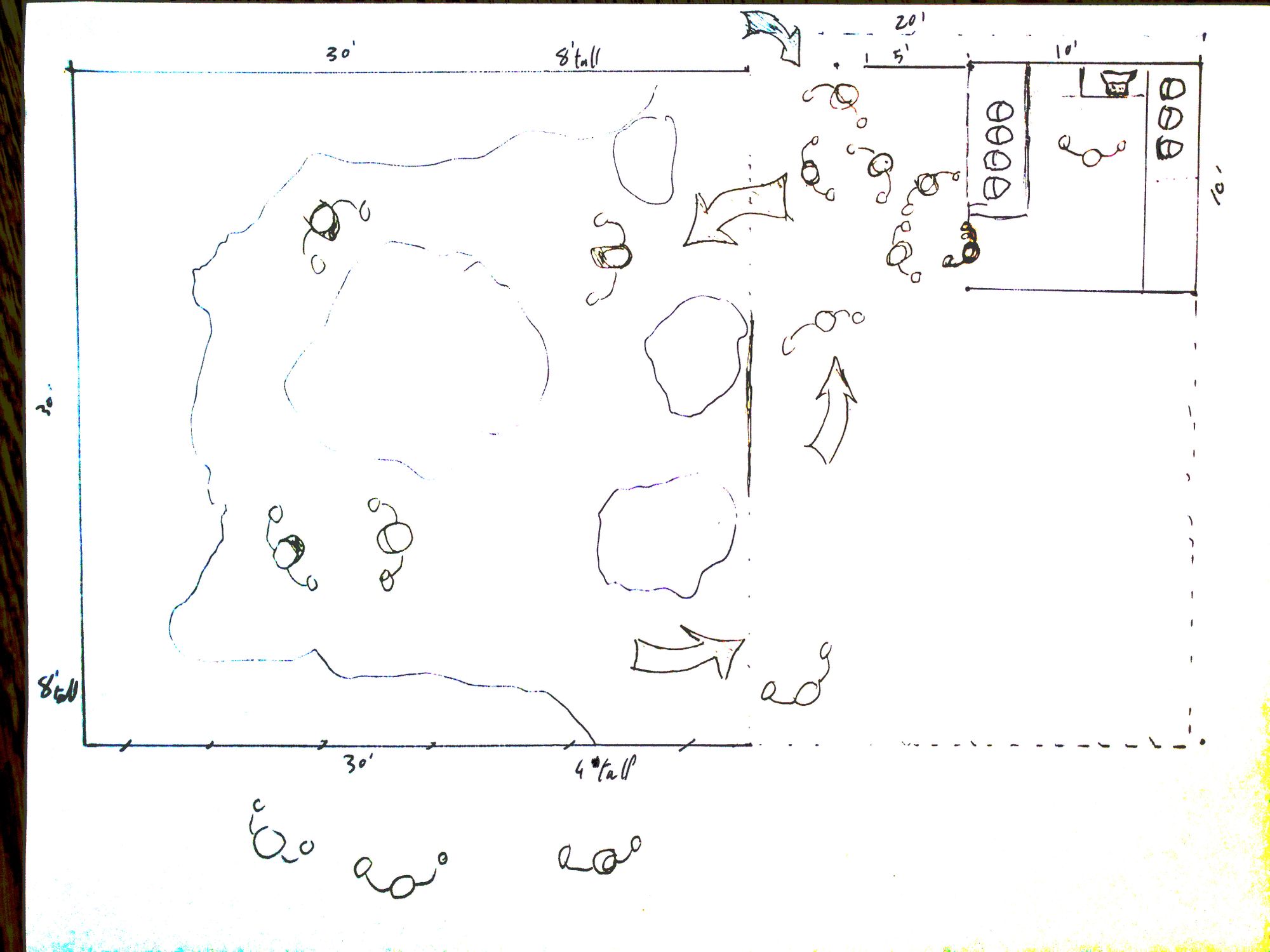

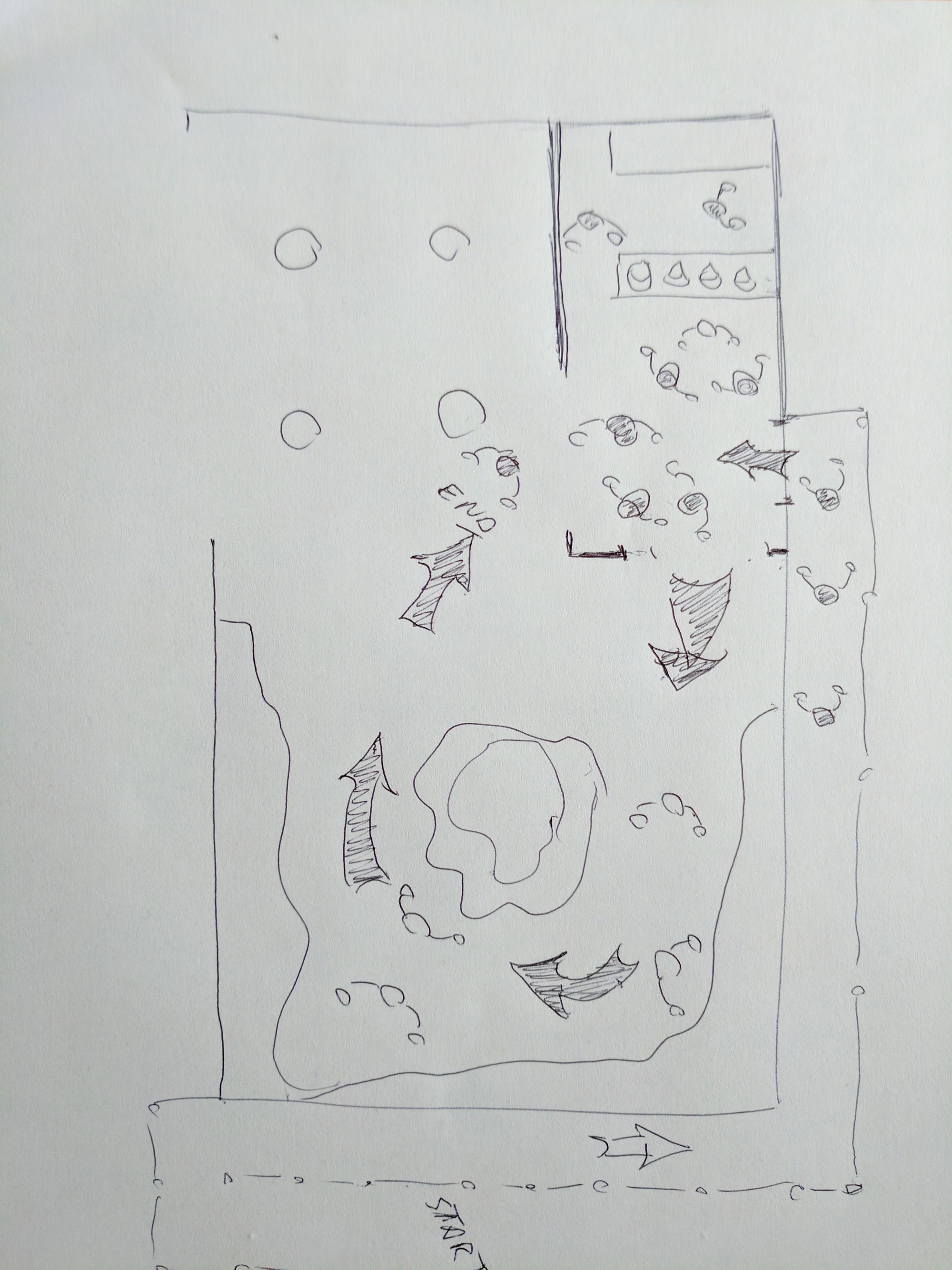

We sketched a few possibilities...

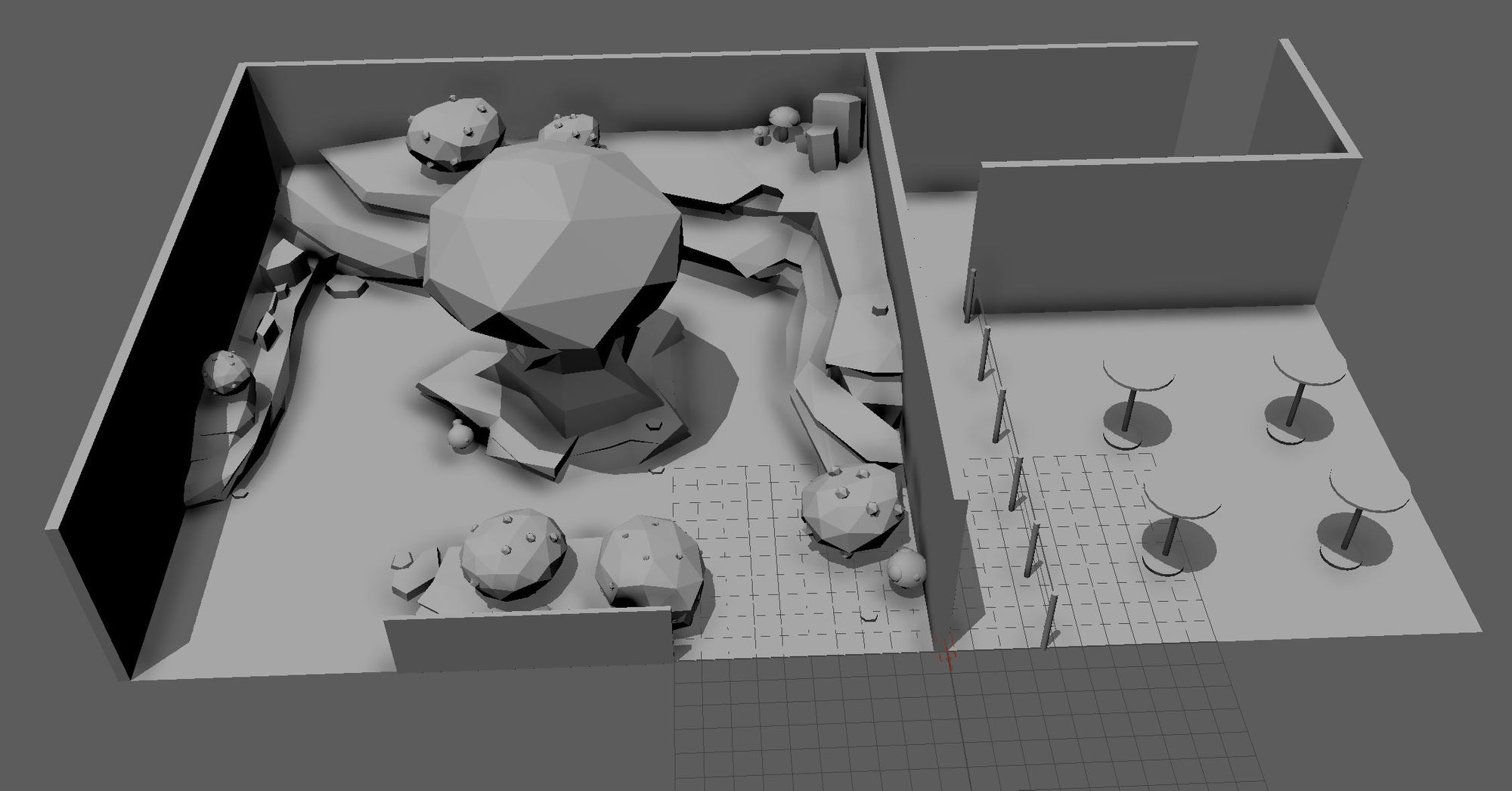

![vrla 3d booth design](vrla_3d_booth_design.JPG)

![vrla 2 side open darker](vrla_2_side_open_darker.jpg)

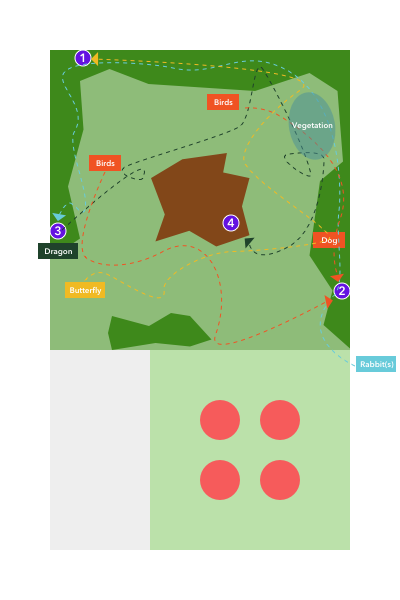

...and ultimately settled on the one below because it met all of our requirements.

![vrla userflow](vrla_userflow.jpg)

To make the flow possible, we had to rotate the entire booth 90 degrees in the convention center.

To provide an efficient and enjoyable experience, timing and preparing the complete user flow on set was critical. We calculated that it was optimal to send 4 visitors into the experience at a time, and that the 'Hunt' itself needed to be under 4 minutes long. This meant each group of 4 visitors would spend approximately 10 minutes in total going through the setup, the 'Hunt' and the photo booth.
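The timing numbers above can be turned into a rough throughput estimate. The sketch below is a back-of-the-envelope calculation, not a planning tool we actually used. It takes the group size (4), the roughly 10-minute end-to-end time per group and the 4-minute ceiling on the 'Hunt' from the text, then compares a strictly sequential booth with a pipelined one in which the next group is fitted while the current one hunts. Treating the 'Hunt' as the slowest shared stage is an assumption.

```python
GROUP_SIZE = 4          # visitors sent onto the set together (from the text)
CYCLE_MINUTES = 10.0    # approx. end-to-end time per group: setup + hunt + photo booth
HUNT_MINUTES = 4.0      # upper bound for the on-set 'Hunt' (from the text)


def visitors_per_hour(minutes_per_group: float, group_size: int = GROUP_SIZE) -> float:
    """Throughput if a new group can start every `minutes_per_group` minutes."""
    return group_size * 60.0 / minutes_per_group


def hours_needed(visitors: int, per_hour: float) -> float:
    """Hours of operation needed to get `visitors` people through the booth."""
    return visitors / per_hour


# Strictly sequential: the next group starts only after the previous one has
# finished setup, hunt and photo booth.
sequential = visitors_per_hour(CYCLE_MINUTES)

# Pipelined (assumption): the next group is fitted while the current one hunts,
# so the set, as the slowest shared stage, limits throughput.
pipelined = visitors_per_hour(HUNT_MINUTES)

if __name__ == "__main__":
    goal = 500  # target from the Envisioning goals
    for label, rate in (("sequential", sequential), ("pipelined", pipelined)):
        print(f"{label}: {rate:.0f} visitors/h, "
              f"{hours_needed(goal, rate):.1f} h for {goal} visitors")
```

Under these assumptions, a sequential booth moves about 24 visitors per hour (roughly 21 hours of operation for the 500-visitor goal), while overlapping the stages raises that to about 60 per hour (a little over 8 hours). That gap illustrates why pulling the next group into setup as soon as the previous one walks onto the set makes such a difference.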

Users Test Different Aesthetics and Styles

Choosing the aesthetic theme of the virtual elements was also important. The set had a somewhat retro look reminiscent of Super Mario 64, and we took some time deciding whether to go with a realistic virtual theme, which would stand out more on set, or an angular, low-poly cartoon theme that matched the set.

We put a few sample models together and created two test scenes to switch between the different characters. After getting opinions from testers as well as internal team members, we decided to go with the matching cartoony theme.

In-experience game mechanics

We believe in user testing, and we put a lot of our assumptions to the test by seeking validation and feedback from end users.

Is the Egg Hunt fun?

Validating the core game mechanic was easy to overlook in the midst of a very busy project, but we needed to find out whether finding virtual eggs in the user's space was exciting. So, during our mixed reality meetup in March, we put together a very simple HoloLens demo with a few eggs placed around the room that opened when users gazed at them.
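The gaze mechanic can be sketched in a few lines. This is a hypothetical, engine-agnostic Python version rather than the demo's actual code. An egg counts as looked at when the angle between the head-gaze direction and the direction from the head to the egg falls within a small cone, and it opens once that gaze has been held for a dwell time. The 5-degree cone and 1-second dwell are assumed values chosen for illustration.

```python
import math
from dataclasses import dataclass

GAZE_CONE_DEG = 5.0   # hypothetical: max angle between gaze and egg direction
DWELL_SECONDS = 1.0   # hypothetical: how long the gaze must rest on an egg


def angle_deg(u, v) -> float:
    """Angle between two 3D vectors, in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        return 0.0
    # Clamp to [-1, 1] so rounding error can't push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


@dataclass
class Egg:
    position: tuple          # (x, y, z) in meters
    dwell: float = 0.0       # seconds of continuous gaze so far
    opened: bool = False

    def update(self, head_pos, gaze_dir, dt: float) -> bool:
        """Advance one frame; return True only on the frame the egg opens."""
        if self.opened:
            return False
        to_egg = tuple(p - h for p, h in zip(self.position, head_pos))
        if angle_deg(gaze_dir, to_egg) <= GAZE_CONE_DEG:
            self.dwell += dt      # keep accumulating while the user looks at it
        else:
            self.dwell = 0.0      # looking away resets the timer
        if self.dwell >= DWELL_SECONDS:
            self.opened = True
            return True
        return False
```

A cone test like this is more forgiving than a thin ray against the egg's mesh, which helps when users are asked to find and hold their gaze on objects around a room; resetting the timer on look-away keeps eggs from opening by accident.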

After over a dozen tests, we learned that the gaze-based interaction worked, but the game itself failed to excite users. Introducing a timer increased the sense of urgency, but something bigger was still missing.

We needed to add more meaning to the experience, and for that we went back to the ideas documented during our Envisioning process. It was clear that we needed to build tension incrementally as users progressed through the experience, and to provide a finale to end it all.

The Story

We had a plethora of ideas: for example, animated cartoon characters asking each visitor for help retrieving their eggs, stolen by an evil villain snake, or a competition among the 4 visitors to collect the most eggs. For many of these ideas to work as well as we would have liked, we would have needed more time.

Also, for a lot of us, some of our most magical experiences have been… We experimented with the concept of an “underworld.” The idea was for the big reveal to be a form of X-ray vision: suddenly, visitors would be able to see inside the trees, which would be full of little rooms for squirrels or rabbits. This would have required us to turn off spatial mapping and rely on a good scan of the set to cut the holes into. We discussed getting a LIDAR scan, but nothing was locked in, so we pushed that idea aside.

The important part was to come up with a reliable way to guide visitors through the experience in a timely fashion. Since ambient animals were already part of the experience, we decided to try a timed sequence of different animals guiding users to each egg. Although it isn't a shared experience (each user opens their own eggs at different times), we could start all four users' experiences simultaneously, so eggs and animals appeared in the same places at the same time for everyone.

So we did our second round of tests using the timed logic.

The great thing about the timed sequence was that it mimicked the attributes of a shared experience. When the dog barked, everyone heard it at the same time, coming from the same place on set.

We used a butterfly, a dog, a rabbit and a dragon, all rigged with basic animations. We also created and recorded a narration to guide users through the 3-minute experience.
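Because every headset in a group starts its timeline at the same moment, each device only needs to know how much time has elapsed since that start to decide what to play. A minimal sketch of that idea follows. The cue names and times are placeholders (the real timeline isn't given here), and a common start time across the four devices is assumed.

```python
import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    at: float   # seconds since the group's common start
    name: str   # what to trigger: an animal guide, an egg, a narration line...


# Hypothetical cue list; the real timings are not given in the text.
TIMELINE = [
    Cue(0.0, "narration_intro"),
    Cue(10.0, "butterfly_leads_to_egg_1"),
    Cue(45.0, "dog_barks_near_egg_2"),
    Cue(80.0, "rabbit_hops_to_egg_3"),
    Cue(120.0, "dragon_egg_appears"),
    Cue(170.0, "fireworks_finale"),
]
_TIMES = [cue.at for cue in TIMELINE]  # must stay sorted for bisect


def due_cues(prev_t: float, now_t: float) -> list:
    """Cues scheduled in [prev_t, now_t), i.e. the ones to fire this frame.

    The result depends only on elapsed time, so every headset started at the
    same moment fires the same cue at the same moment.
    """
    lo = bisect.bisect_left(_TIMES, prev_t)
    hi = bisect.bisect_left(_TIMES, now_t)
    return TIMELINE[lo:hi]
```

Each device would call `due_cues(previous_elapsed, current_elapsed)` once per frame and trigger whatever comes back. Since the schedule depends only on elapsed time, no network messages are needed to keep the four users in step, which fits the no-wifi constraint.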

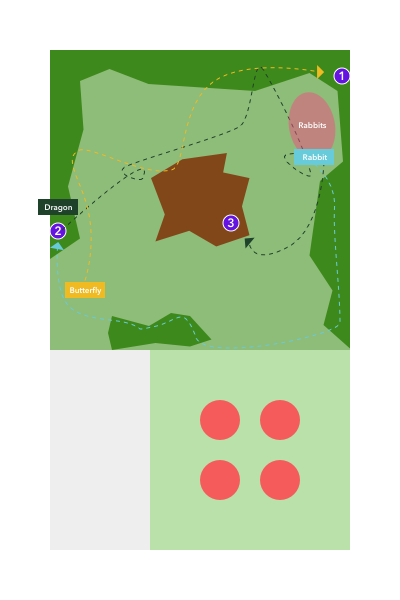

Below is the first animation path we tested.

It ended up being too long, so we removed an egg and sped up the animations to fit our time constraints:

At that point, we started testing the timing on set (which was still being built):

Overall, users responded positively to this flow. The timed animal guides combined with the narration tended to create a more cohesive response from users. Most users bunched up and walked through the set together, which had the perk of giving them a sense of purpose but took away from the element of discovery.

The Final Experience

Due to time constraints, we settled on a middle ground for the final experience. We scaled back the guidance so that it was handled largely by the narration, and spent the rest of our resources on the ambient animals and objects in the scene for visitors to explore and be mesmerized by.

In the end, a dragon stole the show. When a visitor opened one particular egg (strategically placed so they would most likely open it toward the end of their hunt), a dragon emerged from the egg and flew dynamically around the 18 ft. tall tree in the center of the set. For the finale, fireworks went off in the distance as users gathered around for their photo booth shot, concluding the experience.

Modeling and Animations

It was important for us to make the egg-opening animation awesome, because it was the one interaction every user repeated multiple times throughout the experience. Ultimately, all eggs looked like large, regal gems that opened up like a puzzle and sent a colorful shockwave into the surrounding landscape. It was beautiful. Other large modeling and animation sequences included the dragon flight, the bunnies fleeing from the set as users got close to them, and flowers blooming as visitors gazed at them from close by. Although we didn't change the animations in the scene much, it's fair to say it took considerable work and on-site testing to get the look right.
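The proximity-driven details, bunnies fleeing as users approach and flowers blooming when gazed at from close by, come down to simple distance checks. Below is a hypothetical sketch, assuming a y-up coordinate system in meters, arbitrary radii, and a gaze test supplied by the caller as a boolean; it is not the project's actual trigger code.

```python
import math

FLEE_RADIUS_M = 1.5    # hypothetical: a bunny flees once the user is closer than this
BLOOM_RADIUS_M = 2.0   # hypothetical: a gazed-at flower blooms only within this distance


def floor_distance(a, b) -> float:
    """Distance on the floor plane (x, z), ignoring head height vs. prop height."""
    return math.hypot(a[0] - b[0], a[2] - b[2])


def should_flee(bunny_pos, head_pos) -> bool:
    # Each headset only knows its own wearer, so the check is made per device.
    return floor_distance(bunny_pos, head_pos) < FLEE_RADIUS_M


def should_bloom(flower_pos, head_pos, is_gazed_at: bool) -> bool:
    # Both conditions must hold: the user is looking at the flower and is close to it.
    return is_gazed_at and floor_distance(flower_pos, head_pos) <= BLOOM_RADIUS_M
```

Measuring on the floor plane keeps a tall visitor standing next to a flower from being treated as "far away" just because their head is well above it.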

Building the set

Coding the Experience

Coding tests

We ran tests of the story and experience multiple times per week. Each test version had to be coded before we could run it, so we had a full-time developer dedicated to building these test versions, using unfinished assets and, when necessary, assets purchased from the asset store.

Coding the final optimized version

As mentioned earlier, it took a good chunk of our time to get all the final animations and lighting to look and feel right, all without exceeding the technical limits of the HoloLens.

We found the limit by loading as many assets into the experience as it could handle and looking for the threshold where the frame rate began to drop significantly. We then made sure to stay below that threshold to rule out performance issues during the experience.
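The load test described above is essentially a linear search: keep adding assets until the measured frame rate falls below a target, then budget for less than that. The sketch below assumes a caller-supplied `measure_fps(n)` function (hypothetical; on a device the numbers would come from a profiler or frame counter) and uses 60 fps, the frame rate HoloLens apps are designed to hold, as the target. The 80% safety margin is an arbitrary example, not a figure from the project.

```python
from typing import Callable

TARGET_FPS = 60.0     # HoloLens apps aim to render at 60 frames per second
SAFETY_MARGIN = 0.8   # hypothetical: ship with 80% of the measured limit


def find_asset_limit(measure_fps: Callable[[int], float],
                     max_assets: int,
                     min_fps: float = TARGET_FPS) -> int:
    """Largest asset count whose measured frame rate still meets min_fps (0 if none does).

    Assumes the frame rate only gets worse as assets are added, so the first
    failing count marks the threshold and the search can stop there.
    """
    limit = 0
    for n in range(1, max_assets + 1):
        if measure_fps(n) < min_fps:
            break
        limit = n
    return limit


def asset_budget(limit: int, margin: float = SAFETY_MARGIN) -> int:
    """Stay safely below the threshold instead of right at it."""
    return int(limit * margin)
```

For example, if a scene held 60 fps with up to 40 assets and dropped after that, `find_asset_limit` would return 40 and `asset_budget(40)` would leave headroom at 32.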

Photo booth

The photo booth was a last-minute addition to the experience, although it had been among our Envisioning outcomes as a stretch goal. The closer we got to the event date, the more we felt it needed to be in the experience to provide closure.

The idea was to offer participants a group photo at the end of the experience that included all of the virtual elements of the egg hunt.

It turned out to be a neat concept, since it gave participants something they could share on their social channels.

We created a small browser-based client application that grabbed the photo taken on a HoloLens using the Device Portal API.
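For reference, here is a minimal sketch of a client that lists the mixed reality captures on a HoloLens and downloads the newest photo over the Device Portal REST API. It is written in Python for brevity rather than as the browser app we built. Several details are assumptions to verify against the Device Portal API reference for your OS build: the endpoint paths (`/api/holographic/mrc/files` and `/api/holographic/mrc/file`), the base64-encoded `filename` parameter with `op=stream`, and the `MrcRecordings`, `FileName` and `CreationTime` fields in the listing. The base URL and credentials are placeholders.

```python
import base64
import json
import ssl
import urllib.parse
import urllib.request
from typing import Optional

# Assumed Device Portal endpoints; check them against the API reference for your OS build.
FILES_PATH = "/api/holographic/mrc/files"  # list captures (JSON)
FILE_PATH = "/api/holographic/mrc/file"    # download one capture


def file_url(base_url: str, filename: str) -> str:
    """Download URL for one capture; the filename is passed base64-encoded."""
    encoded = base64.b64encode(filename.encode("utf-8")).decode("ascii")
    query = urllib.parse.urlencode({"filename": encoded, "op": "stream"})
    return f"{base_url}{FILE_PATH}?{query}"


def pick_latest_photo(recordings: list) -> Optional[str]:
    """File name of the newest .jpg capture, ignoring videos; None if there is none."""
    photos = [r for r in recordings if r["FileName"].lower().endswith(".jpg")]
    if not photos:
        return None
    return max(photos, key=lambda r: r["CreationTime"])["FileName"]


def fetch_latest_photo(base_url: str, user: str, password: str) -> Optional[bytes]:
    """Download the newest mixed reality photo from one HoloLens."""
    # Device Portal normally serves a self-signed certificate; skipping
    # verification is only reasonable on a trusted, isolated network.
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    headers = {"Authorization": f"Basic {token}"}

    def get(url: str) -> bytes:
        request = urllib.request.Request(url, headers=headers)
        with urllib.request.urlopen(request, context=ctx) as response:
            return response.read()

    listing = json.loads(get(f"{base_url}{FILES_PATH}"))
    name = pick_latest_photo(listing.get("MrcRecordings", []))
    return get(file_url(base_url, name)) if name else None
```

Calling `fetch_latest_photo("https://<device-address>", "user", "password")` would return the JPEG bytes (or `None` if the device holds no photos), ready to be saved or displayed on the photo booth laptop.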

Running the Experience

Building the experience was one part; running it was a whole other beast. The HoloLens is designed for the workplace, so designing an entertaining consumer experience for it was new and challenging.

Requirements

- Clean the HoloLens for each use

- Keep count of all HoloLenses throughout the expo

- Take personalized IPD measurements for every visitor

- Set up and brief 4 to 5 users within a 3-minute limit

- Keep the HoloLenses charged

- Confirm the spatial mapping is aligned on each device, per use

Staff roles on demo floor

We defined 5 staff roles to run a well-oiled demo station:

1. IPD measurement

The first staff role was to stand at the end of the line, measure everyone's IPD, note the measurements and hand them off to the tech staff. We regularly had two team members doing this, as it was a good opportunity to answer questions about the experience and build participants' anticipation before they entered.

We originally used a Kinect-based IPD measurement. It was less intrusive, but it took too much time per user, so we scrapped it and measured manually.

< picture of the kinect IPD >

2. Briefing & fitting

Four people (up to six at times) were sent into the setup area with their IPD measurements, where the briefing and fitting staff helped fit each visitor's individually configured HoloLens onto their head. To make sure the alignment wasn't off, we placed a green virtual butterfly on one of the walls and asked visitors to confirm they could see it. If the butterfly wasn't there, we had to relaunch the application for that visitor.

Once all the devices were fitted securely, the staff member stood in front of the group to introduce the experience, and made sure the previous group of participants was done with their on-set experience before instructing the next group to walk onto the set.

As soon as a group walked onto the set, the next four participants were pulled into the setup area.

3. Tech

The tech attendant was in charge of starting the application on all devices and customizing the IPD for every participant. As soon as the measurements came into the setup area, they plugged each HoloLens into the laptop to set the IPD, then placed it on the table, labeled with the corresponding visitor's name tag, ready to be picked up and fitted by the briefing and fitting staff.

4. Device maintenance

We had a total of 20 HoloLenses, and it was crucial to make sure none of them were out of sight at any time during the expo. We regularly had 2 staff members on device maintenance: one kept an eye on the devices on set and collected them all when each group finished, while the other cleaned each device after every use and kept the devices in a smooth rotation to maintain adequate battery life.
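The rotation and accounting rules above map onto a small least-recently-used pool: always hand out the device that has rested (and charged) the longest, and know at any moment which devices are out on set and with whom. This is a hypothetical sketch of that bookkeeping, not a description of how we tracked devices at the booth; device IDs and the check-out/check-in flow are assumptions.

```python
from collections import OrderedDict


class DevicePool:
    """Hypothetical bookkeeping for a fixed set of headsets, rotated least-recently-used first."""

    def __init__(self, device_ids):
        # Order = resting order: the device at the front has rested (and charged) longest.
        self._resting = OrderedDict((d, None) for d in device_ids)
        self._on_set = {}  # device id -> visitor name tag

    def check_out(self, visitor: str) -> str:
        """Hand the longest-resting device to a visitor."""
        if not self._resting:
            raise RuntimeError("no cleaned, charged device available")
        device, _ = self._resting.popitem(last=False)
        self._on_set[device] = visitor
        return device

    def check_in(self, device: str) -> None:
        """Device collected from the set and cleaned; it goes to the back of the queue."""
        del self._on_set[device]  # KeyError flags a device that was never checked out
        self._resting[device] = None

    def on_set(self) -> dict:
        """Which devices are currently out, and with whom, for the end-of-group collection."""
        return dict(self._on_set)

    def total(self) -> int:
        """Every device is either resting or on set, so this should equal the pool size."""
        return len(self._resting) + len(self._on_set)
```

Because returned devices go to the back of the queue, usage spreads evenly across all 20 headsets instead of the same few being reused while their batteries are still low.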

5. Photo booth

The photo booth staff member was posted next to a laptop right outside the set area, in an ideal spot to shoot the final photo. As a group of participants finished the experience, this staff member took a group shot of them using his or her own HoloLens and gathered everyone around the computer to capture their email addresses.

When participants were done entering their email addresses, they all got delicious dark chocolate bars, and some willing participants took a survey.

Conclusion

This project was an awesome learning experience and a great success. A lot of work goes into running a large demo booth smoothly, and we are now more confident in our ability to create experiences of similar scale.

Check out our Case Study for all the details.